Reinforcement Learning and Model Predictive Control

Although Luna relies on a broad range of sensors that deliver real-time feedback for direct control, we are planning to enhance its capabilities and real-world applications. There are two distinct ways to do this through machine learning (ML). On one side, we use auxiliary ML models to increase the reliability of the system as a whole, using additional sensors to improve the readings of the main sensors. On the other side, we learn agent policies that cover a broader range of applications: they can control Luna on their own and follow given commands such as moving in a certain direction or rotating on the spot. By implementing a digital twin, we were able to test our software stack and hardware plans before starting the costly process of building hardware prototypes, thereby reducing the time we needed to get our first working system into a prototype state.

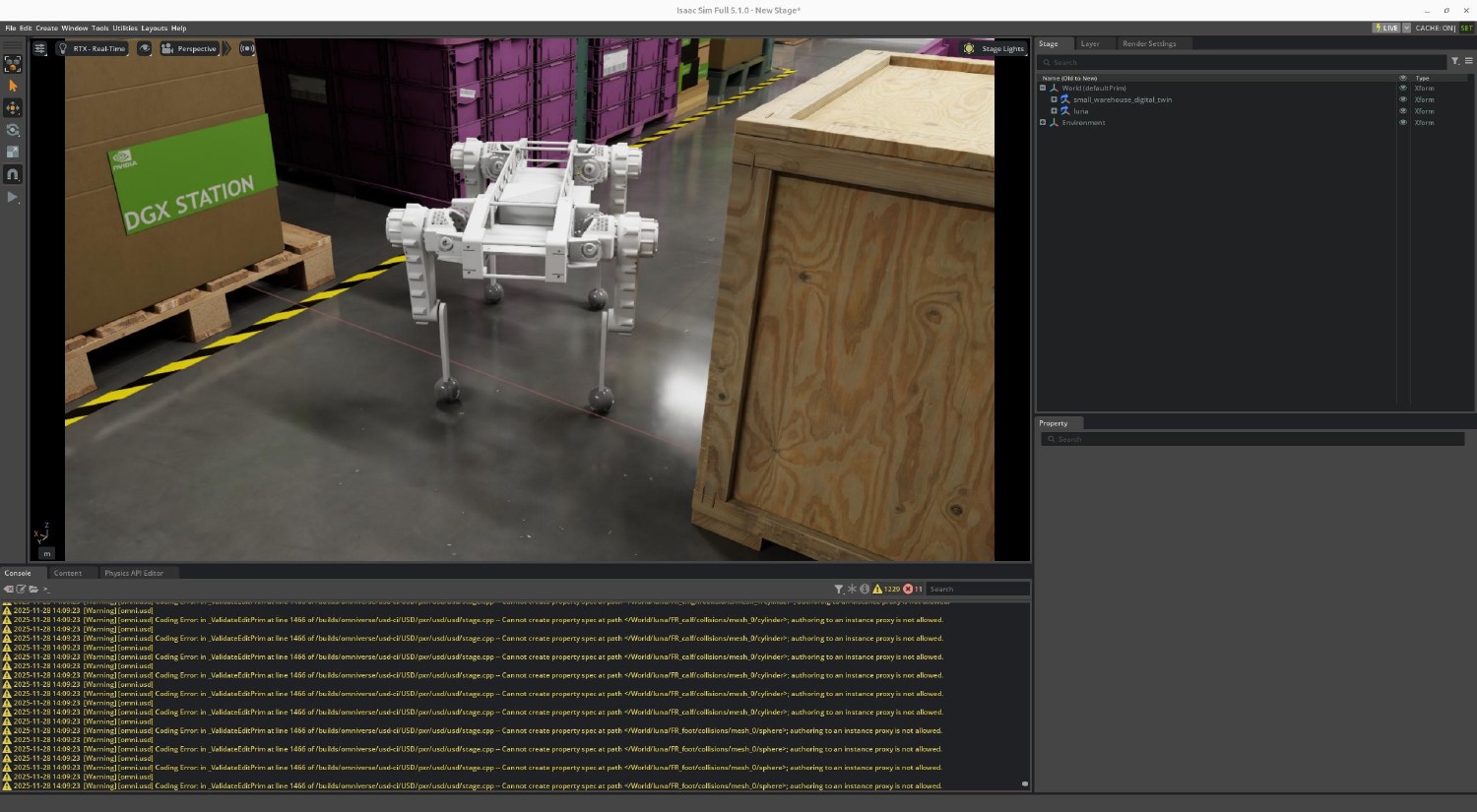

Luna in NVIDIA Isaac Sim

Agent policies are trained using simulation technology provided by NVIDIA, which lets us use current algorithms that have proven to be best suited to delivering reliable performance in various real-world scenarios. Although these methods have been shown to be easily deployable on common robotics hardware, it takes continuous effort to deploy these policies on custom hardware such as our in-house developed Luna quadruped platform.

The latest addition to our auxiliary machine learning suite is a good example of what is possible through careful data collection and refinement. We were able to train an ML model that predicts foot contacts using only data from internal sensors that are already integrated into the current motor configuration. We collected sensor data from a real-world deployment of Luna walking on a flat surface and trained the model to predict foot contacts based only on motor sensor readings.
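To make the idea of predicting foot contacts from motor readings more concrete, here is a minimal sketch in plain Python. It is not the model we trained on Luna: the two features (motor current and joint velocity), the logistic regression classifier, and all function names are assumptions chosen for illustration, and the data is purely synthetic.

```python
import math
import random


def make_synthetic_samples(n, seed=0):
    """Generate (features, label) pairs. Purely synthetic, NOT data from Luna."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        in_contact = rng.random() < 0.5
        # Illustrative assumption: a leg in contact draws more motor current
        # and moves more slowly than a leg swinging through the air.
        current = rng.gauss(2.0 if in_contact else 0.5, 0.3)
        velocity = rng.gauss(0.2 if in_contact else 1.5, 0.3)
        samples.append(((current, velocity), 1 if in_contact else 0))
    return samples


def sigmoid(z):
    # Clamp the input so math.exp cannot overflow.
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic_regression(samples, epochs=200, lr=0.1):
    """Fit weights and bias with plain stochastic gradient descent."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, y in samples:
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            err = p - y  # gradient of the log loss w.r.t. the logit
            w[0] -= lr * err * x[0]
            w[1] -= lr * err * x[1]
            b -= lr * err
    return w, b


def predict_contact(w, b, x):
    """Return True if the model estimates that the foot is in contact."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b) >= 0.5


train = make_synthetic_samples(400, seed=0)
held_out = make_synthetic_samples(200, seed=1)
w, b = train_logistic_regression(train)
accuracy = sum(predict_contact(w, b, x) == bool(y) for x, y in held_out) / len(held_out)
print(f"held-out accuracy on synthetic data: {accuracy:.2f}")
```

Real recordings are much noisier than this toy data, so a practical model would likely need more features per leg and some temporal context, but the overall flow of collecting labeled readings, fitting a classifier, and checking it on held-out data stays the same.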

We also want to experiment with other control strategies such as model predictive control (MPC). For this, we are deploying an MPC solver from IIT Milano on Luna, which will serve as a good baseline for locomotion. To this end, we are collaborating with the KIT Robotics institute IAR-HCR on a master's thesis, in which we aim to enable this solver to run on lower-end hardware and at a higher control frequency through GPU acceleration of its underlying mathematical solver, acados. Stay tuned for developments and publications on this topic!